#1 AI Visibility (AEO / GEO) Optimization Platform • Draft-Safe Execution

Be the brand AI cites — and turn that visibility into revenue.

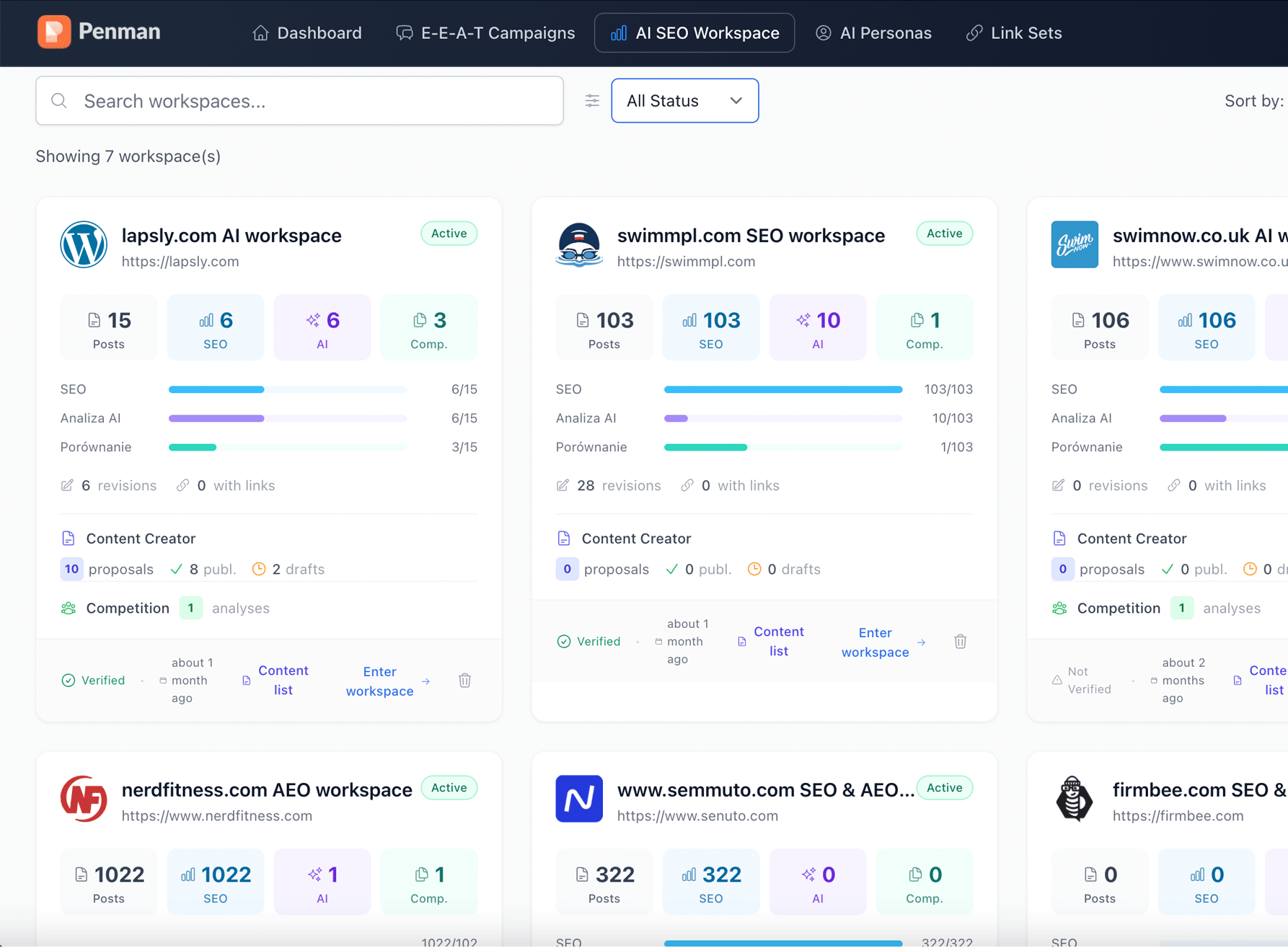

PENMAN helps you become a trusted source in AI answers by improving how your pages are understood and cited. It shows where you’re missing from AI answers and what competitors have that you don’t, then turns the fixes into ready-to-publish WordPress drafts, so you can drive more qualified traffic, more leads, and more sales.

Sources + relevance tiers + included vs ignored.

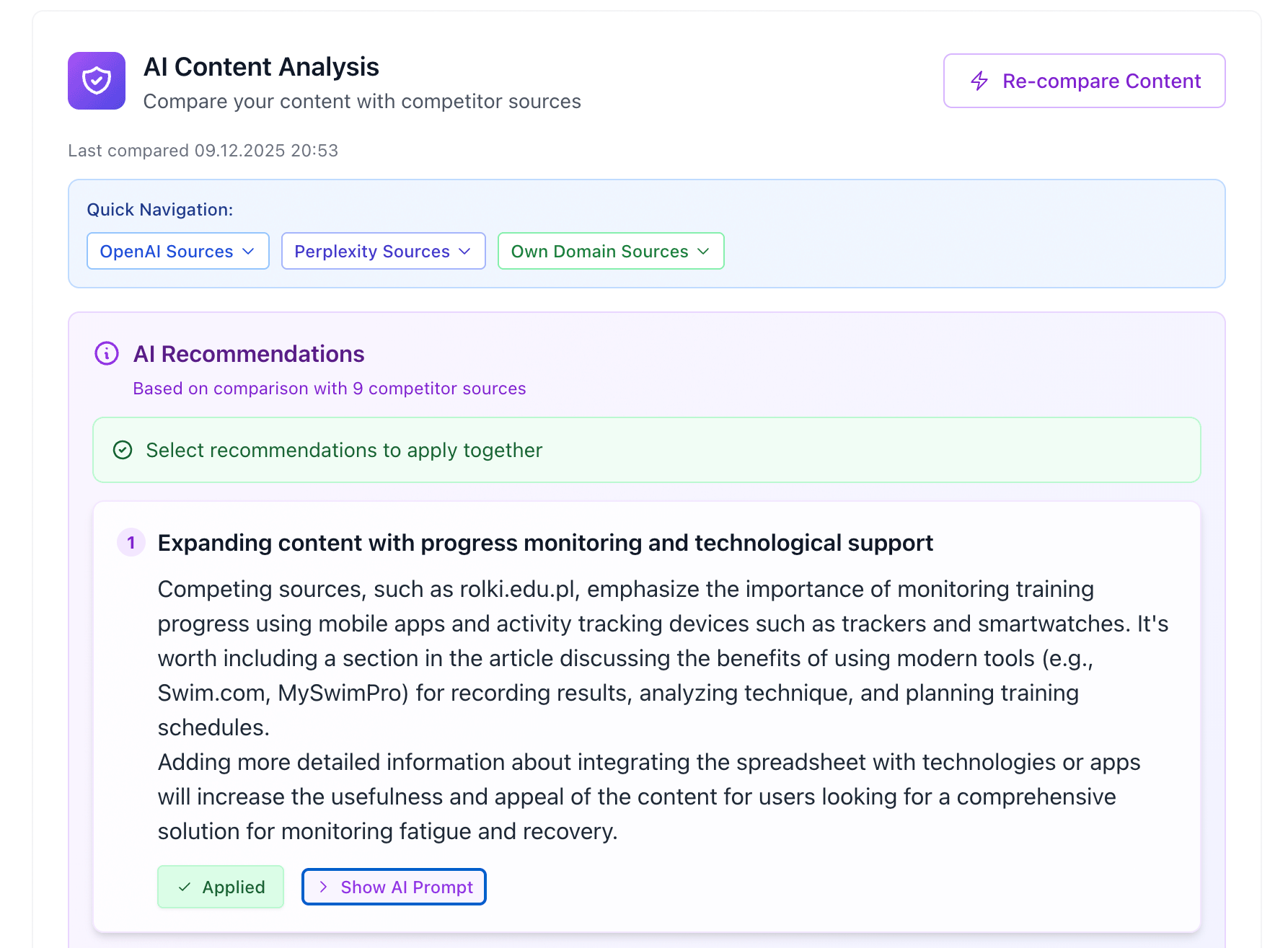

Compare against sources AI already uses.

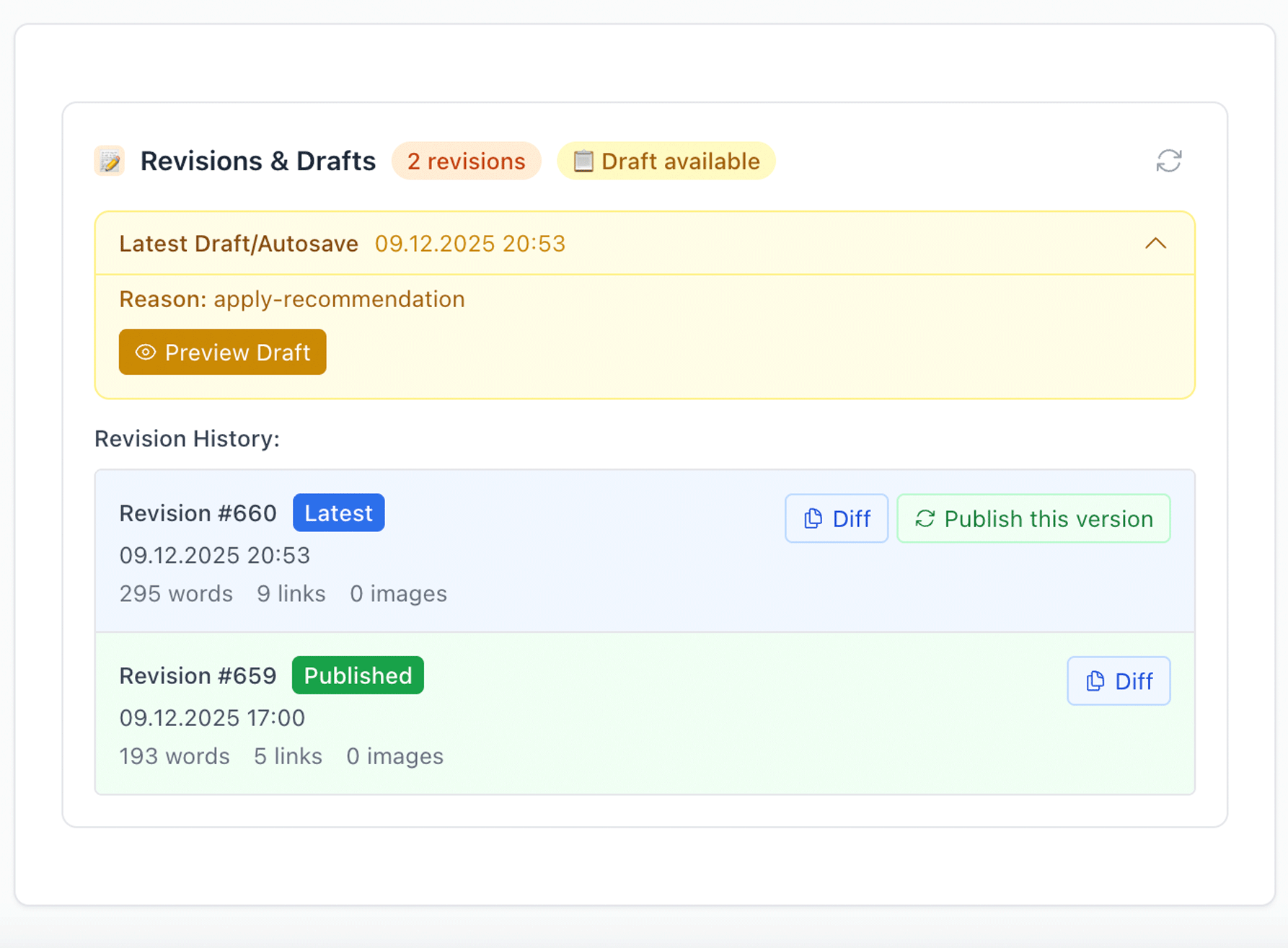

Draft-first publishing with diffs and rollback.

Trusted by teams who optimize content responsibly

PENMAN helps teams optimize for AEO/GEO by turning visibility into a structured, explainable workflow, from signals and model-driven actions to safe, controlled execution.

The PENMAN loop: Measure → Explain → Execute

Stop guessing. Run a repeatable AEO/GEO workflow.

AI results shift fast. PENMAN helps you respond with clarity — and ship changes without risking production content.

See whether AI uses your pages as sources — plus relevance tiers and “included vs ignored” signals.

Your “competition” is the sources AI already uses. Compare coverage and get explainable recommendations.

Apply changes as drafts, review diffs, and roll back if needed. Publishing is always explicit.
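
For readers who want a concrete picture of a draft-first flow, here is a minimal sketch in Python. It is an illustration under stated assumptions, not PENMAN's implementation: the `Page` class and its `stage`, `diff`, `publish`, and `rollback` methods are hypothetical names, and the page is held in memory rather than in WordPress. Only the standard-library `difflib` module is used to produce the diff.

```python
import difflib
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Page:
    """Hypothetical in-memory stand-in for a CMS page (assumption, not a real API)."""
    published: str
    draft: Optional[str] = None
    history: List[str] = field(default_factory=list)

    def stage(self, new_text: str) -> None:
        # Staging only sets the draft; the published text is left untouched.
        self.draft = new_text

    def diff(self) -> str:
        # Unified diff between the published text and the staged draft.
        if self.draft is None:
            return ""
        return "".join(difflib.unified_diff(
            self.published.splitlines(keepends=True),
            self.draft.splitlines(keepends=True),
            fromfile="published",
            tofile="draft",
        ))

    def publish(self) -> None:
        # Publishing is a separate, explicit call; staging never goes live by itself.
        if self.draft is None:
            raise ValueError("no draft staged")
        self.history.append(self.published)
        self.published, self.draft = self.draft, None

    def rollback(self) -> None:
        # Restore the most recently replaced published revision.
        if not self.history:
            raise ValueError("nothing to roll back to")
        self.published = self.history.pop()


page = Page(published="Old intro.\n")
page.stage("New intro with a clear definition.\n")
print(page.diff())   # review the change before anything goes live
page.publish()       # explicit approval step
page.rollback()      # restores "Old intro.\n"
```

Keeping `stage` and `publish` as separate calls is the point of the sketch: a reviewer can read the diff between the two steps, and the revision history makes the rollback a single operation.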

PENMAN can use structured representations (entities + intent modeling) to turn AI outputs into consistent, repeatable signals — then convert those signals into scoped tasks and draft-safe changes.

Proof you can act on — not a static report

The goal is speed with control: understand what blocks selection, stage a draft, and re-run visibility to verify movement.

A simple weekly cadence to stay visible as AI outputs shift.

Pick a topic/intent set and capture sources + tiers.

Stage draft changes; review diffs with your team.

Track movement in preference signals over time.

Teams use PENMAN to move fast without breaking trust

Low-drama improvements: explainable signals, clear tasks, and a safety layer that respects editorial control.

“We stopped debating what to change. The source set made it obvious — and drafts kept production safe.”

“Explainable recommendations beat generic advice. We shipped small batches and re-verified quickly.”

“The governance layer matters. Diffs, rollback, and controlled execution made this usable for clients.”

Pricing model: Subscription + credits for AI actions

Start small. Scale with usage.

Plans give access to the system. Credits scale actions like visibility runs, comparisons, recommendations, and draft generation — without promising outcomes like guaranteed citations or stable inclusion.

Teams validating AI visibility on key pages and topics.

Repeatable workflow runs and controlled execution — not “rankings.”

Draft-first changes, diffs, revisions, and rollback.

Measure AI visibility today — ship safely tomorrow.

Start with AI Visibility. Convert gaps into drafts you approve. Re-run to verify movement — without risky automation.